Creative Exploration with Reasoning LLMs

Large Language Models (LLMs) generate coherent text but tend to produce homogeneous outputs when prompted for creative content. To overcome this, I introduce Lluminate, an LLM-based evolutionary algorithm that explores diverse creations by combining evolutionary methods with principled creative thinking strategies. The findings demonstrate that integrating evolutionary pressure with formalized creative strategies enables sustained open-ended exploration (illumination) of latent creative spaces, offering new possibilities for assisted creative exploration, countering homogenization, and perhaps even enabling the discovery of new ideas.

Prompt: make an interesting shader

Baseline

If you wanted the code for a shader animation, the baseline (above) represents what you could get by asking a chatbot such as ChatGPT to "make an interesting shader". The results are fairly homogeneous with a bias towards saturated colors and rotating spiral patterns. This is the classic case of the language models defaulting towards average solutions. Lluminate can evolve a more diverse set of results including varied shapes, structures and colors.

Novelty analysis

The system explicitly searches for a set of novel solutions and works for any type of text or image output. Each output is embedded in a semantic space using CLIP (Contrastive Language-Image Pretraining), an AI model that understands relationships between images and text. This allows us to measure how different and innovative each solution is compared to the others. We can analyze the increase in novelty for the run that produced the above shaders.

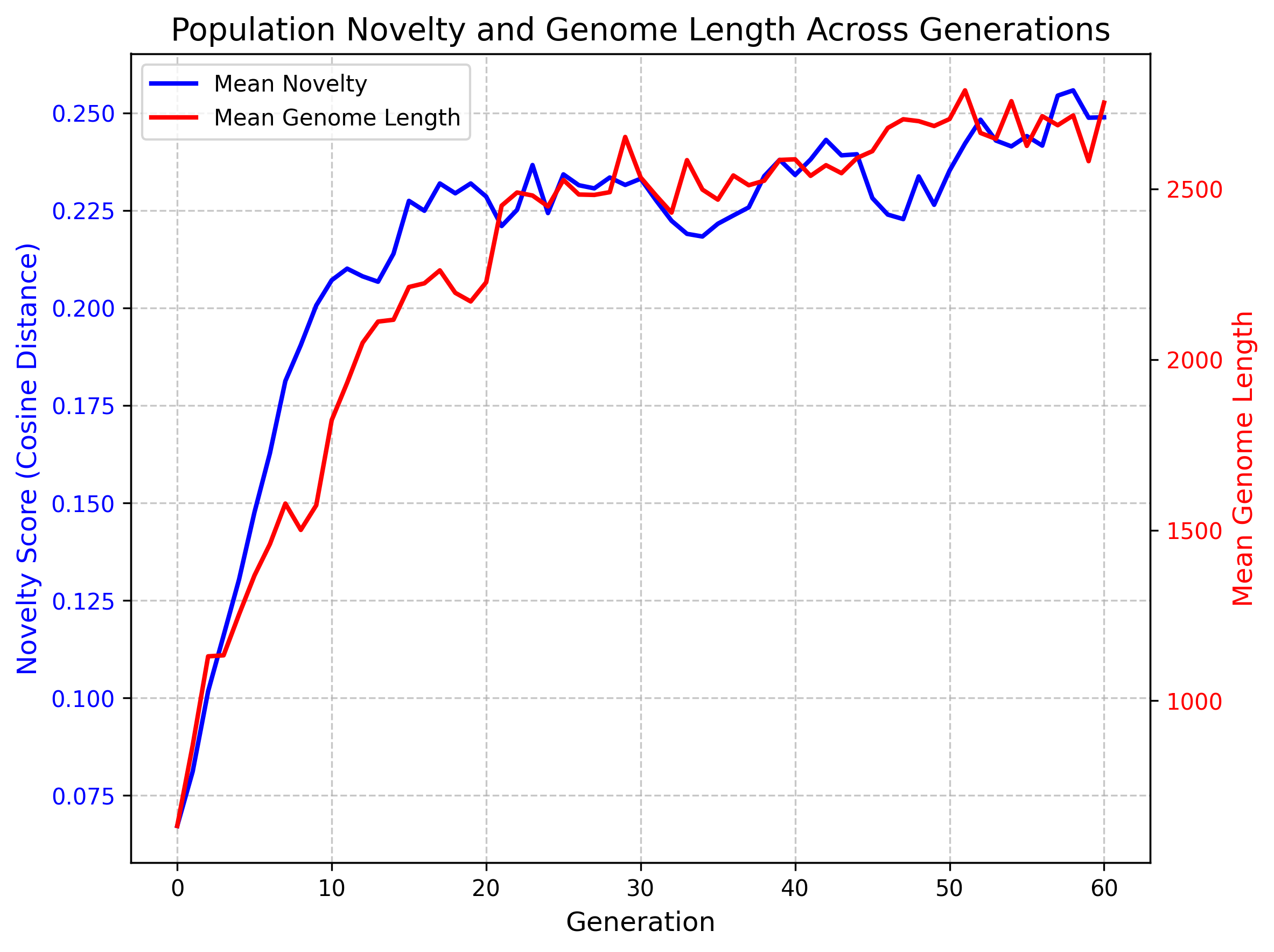

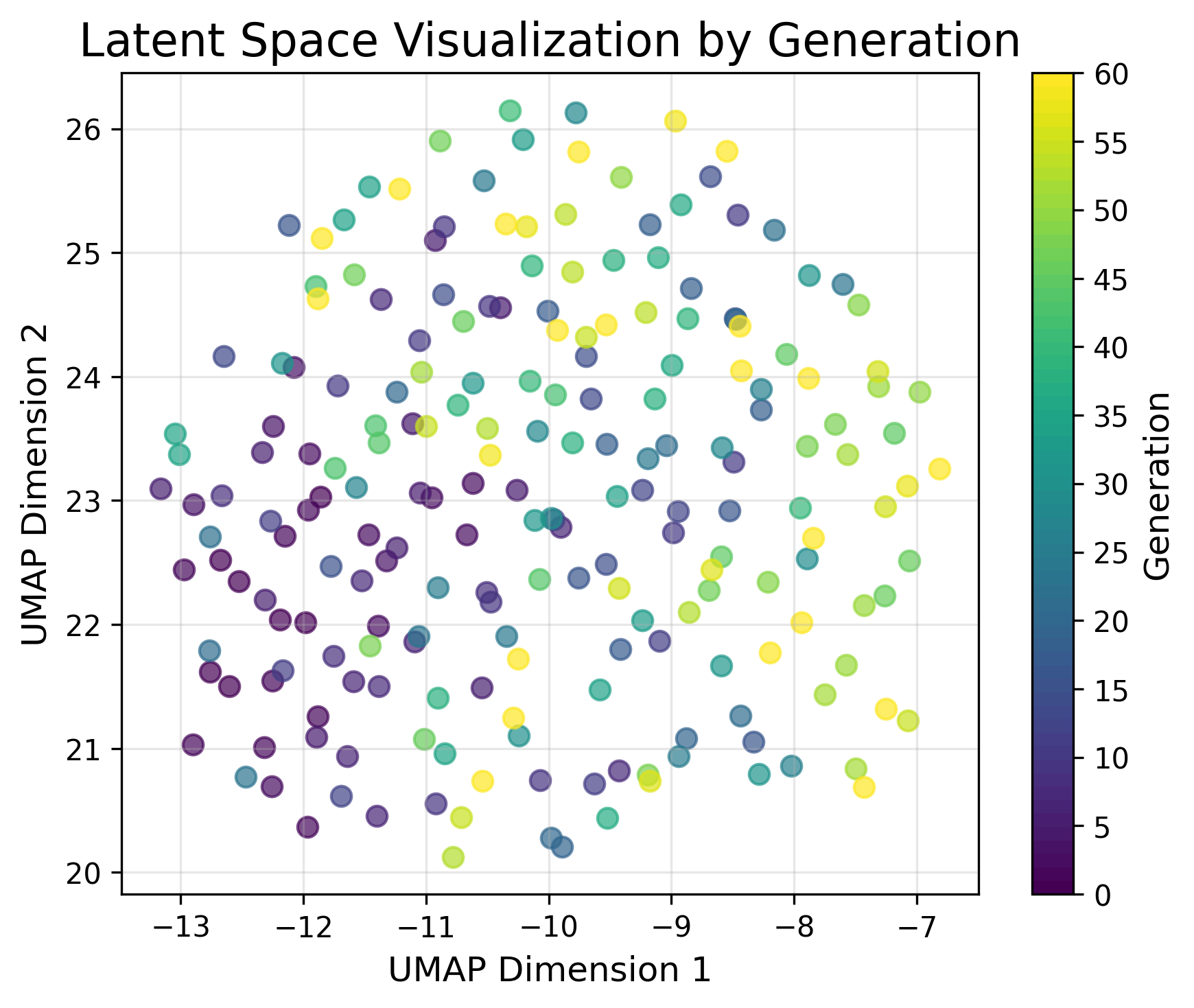

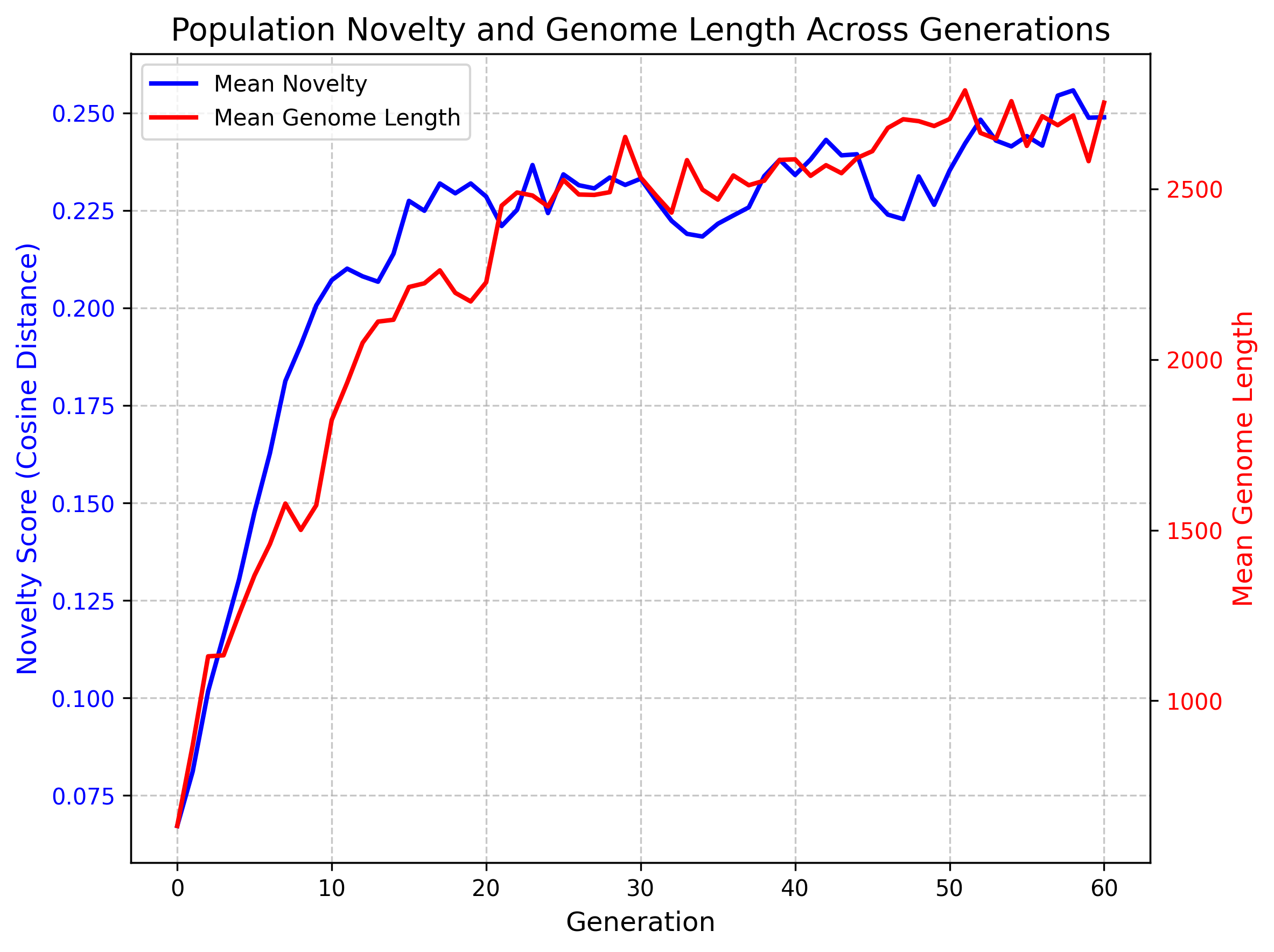

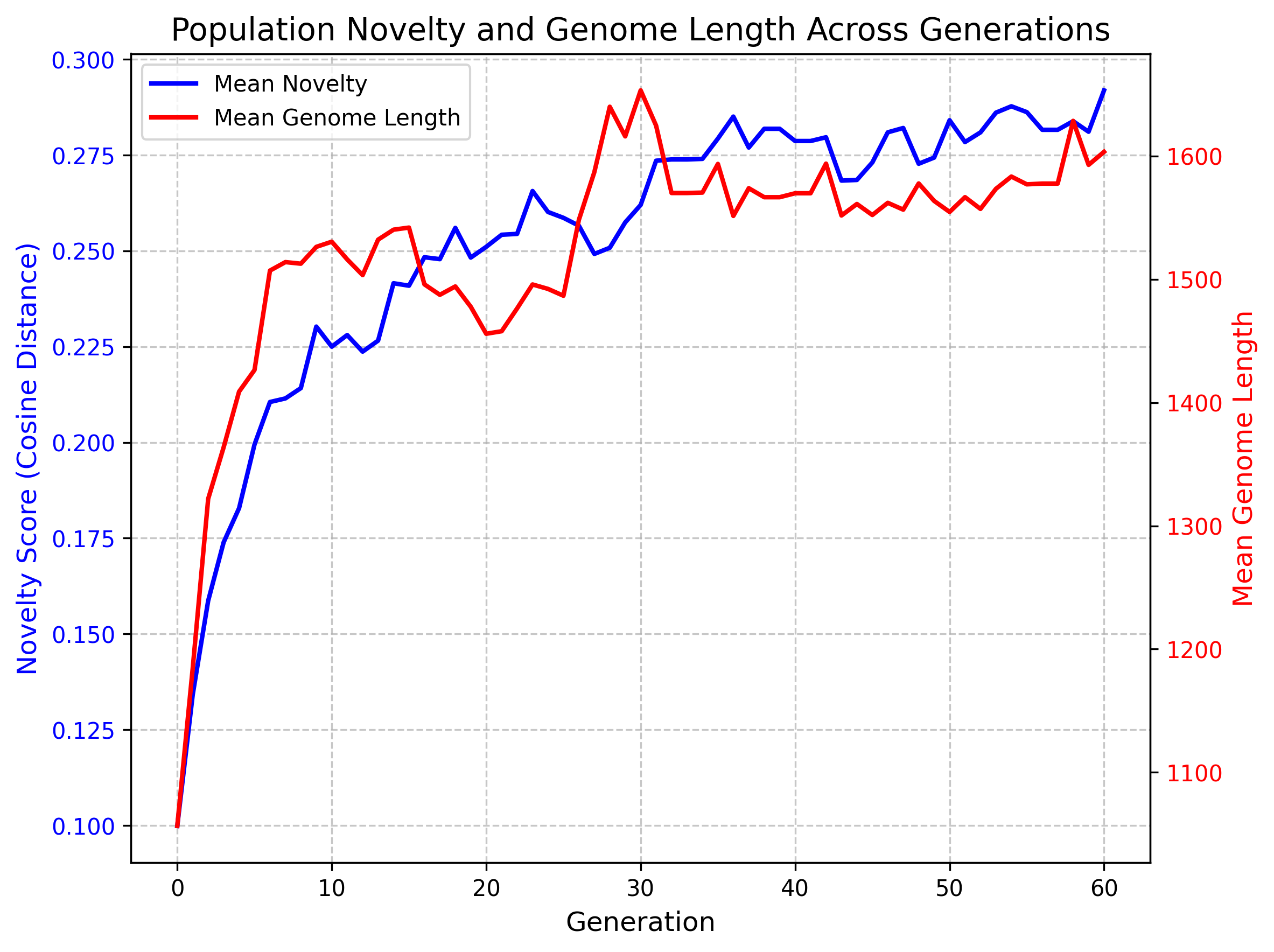

This figure illustrates the evolutionary progression across 60 generations, viewed through complementary perspectives. The novelty metric (left, blue), measuring the average cosine distance to the k=3 nearest neighbors, shows two distinct phases: rapid initial exploration (generations 0-15) followed by steady incremental growth (generations 15-60). Genome length (left, red), the length of the code in characters, follows a similar trajectory. The UMAP visualization (right) confirms this progression spatially, showing early generations (purple) concentrated in the lower left, with a gradual expansion toward the upper and right regions in later generations (yellow). Together, these patterns demonstrate sustained exploration of the latent space, with novelty and genome length growing in tandem.
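The novelty metric described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: the function name `knn_novelty` is hypothetical, and it assumes the embeddings (for example from CLIP) are already available as rows of a NumPy array.

```python
import numpy as np


def knn_novelty(embeddings, k=3):
    """Mean cosine distance from each embedding to its k nearest neighbors.

    `embeddings` is an (n, d) array-like with one row per artifact.
    Hypothetical helper for illustration; assumes n > k and no zero rows.
    """
    vectors = np.asarray(embeddings, dtype=float)
    if vectors.ndim != 2 or len(vectors) <= k:
        raise ValueError("need a 2-D array with more than k rows")
    # Normalize rows so that a dot product equals cosine similarity.
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    distance = 1.0 - unit @ unit.T          # pairwise cosine distance
    np.fill_diagonal(distance, np.inf)      # an artifact is not its own neighbor
    nearest = np.sort(distance, axis=1)[:, :k]
    return nearest.mean(axis=1)
```

A higher score means the artifact sits in a sparser region of the embedding space, which is what the selection step rewards.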

Prompt: a creative time display.

Baseline

Designing a creative clock display is a standard assignment in introductory creative programming classes because of its vast creative potential. The baseline clocks nearly all share the same radial format with minor color variation.

Image descriptions are evolved and converted into images with the Flux-dev model. The baseline images have a consistent bobby white and neon blue color palette. The evolved images are more diverse and include a variety of styles and colors, although they remain limited by the capacity of the image generator.

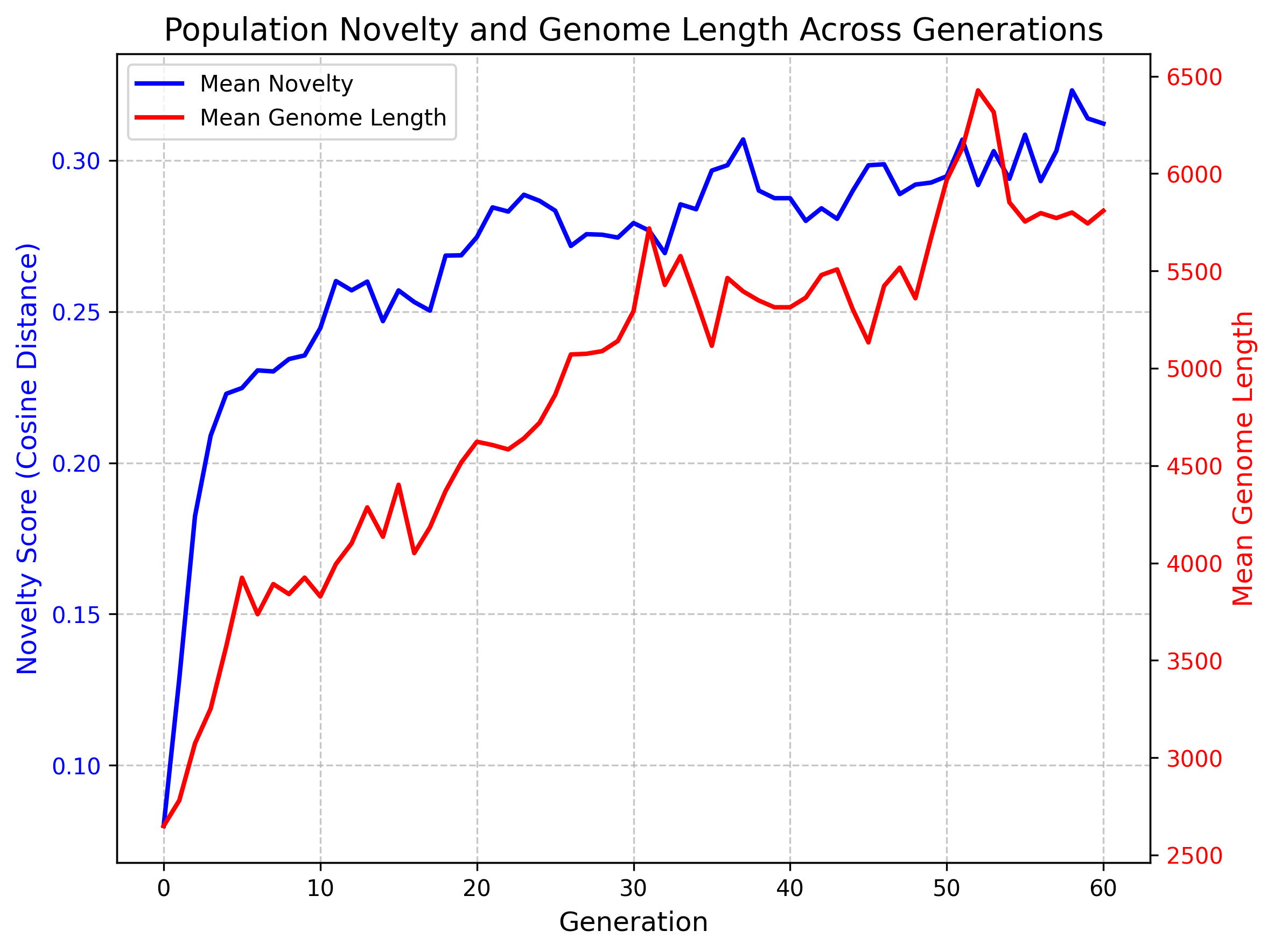

All domains exhibit similar novelty dynamics and remain unsaturated after 60 generations each.

Shaders

p5js Clocks

Image Prompts

Algorithm

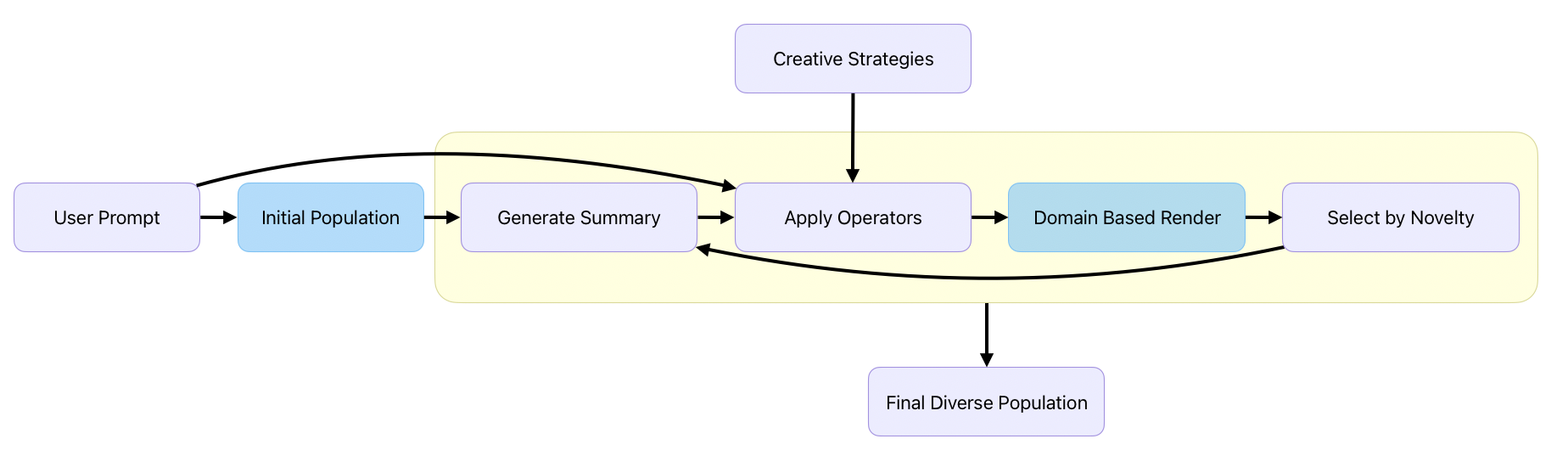

Source Code

The algorithm combines evolutionary computation principles with large language models to create an open-ended discovery system. All mutations are applied to the genome (the artifact's text), which is then rendered as a phenotype for embedding.

- Summary Generation: Create a summary of the current population to provide context for future generations

- Creative Strategy Injection: Randomly select and apply creative thinking strategies to guide the LLM

- Evolutionary Operators: Create new artifacts by modifying or combining existing ones via prompts using the current summary and strategies.

- Embedding and Novelty Calculation: Measure how different each artifact is from others using embeddings (average distance to k nearest neighbors)

- Population Management: Select the most diverse artifacts for the next generation
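The five steps above can be sketched as a single loop. This is a rough outline under stated assumptions rather than the project's implementation: `evolve`, `knn_novelty`, and the `generate`, `embed`, and `summarize` callables are hypothetical names standing in for the LLM calls and the embedding model, and selecting survivors by ranking per-artifact novelty is one plausible reading of "select the most diverse artifacts".

```python
import random

import numpy as np


def knn_novelty(vectors, k):
    """Mean cosine distance to the k nearest neighbors (assumes no zero rows)."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    distance = 1.0 - unit @ unit.T
    np.fill_diagonal(distance, np.inf)
    return np.sort(distance, axis=1)[:, :k].mean(axis=1)


def evolve(population, generate, embed, summarize, strategies,
           generations=30, children=10, k=3, rng=None):
    """Hypothetical outline of the loop; all callables are supplied by the caller.

    generate(parent, summary, strategy) -> new artifact text (an LLM call)
    embed(artifact) -> embedding vector of the rendered artifact
    summarize(population) -> short text summary of the population (an LLM call)
    """
    rng = rng or random.Random()
    population = list(population)
    size = len(population)
    for _ in range(generations):
        # 1. Summary generation: context for this generation's prompts.
        summary = summarize(population)
        offspring = []
        for _ in range(children):
            # 2. Creative strategy injection: pick one strategy at random.
            strategy = rng.choice(strategies)
            # 3. Evolutionary operator: here, variation of a random parent.
            parent = rng.choice(population)
            offspring.append(generate(parent, summary, strategy))
        candidates = population + offspring
        # 4. Embedding and novelty calculation over parents and children.
        vectors = np.array([embed(c) for c in candidates], dtype=float)
        scores = knn_novelty(vectors, k)
        # 5. Population management (assumption: keep the top scorers).
        keep = np.argsort(scores)[::-1][:size]
        population = [candidates[i] for i in keep]
    return population
```

Passing the LLM and embedding calls in as functions keeps the loop testable with cheap stubs before any API calls are made.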

Creative Strategies

The classic prompt of "think step by step" famously improved the ability of language models to solve logical problems. To test whether a similar approach can improve creative thinking, the reasoning model was prompted to follow the steps from one of the creative strategies below before creating new artifacts.

Oblique Strategies

Process:

- draw random card: Select a random cryptic directive such as 'Honor thy error as a hidden intention,' 'Use an old idea,' or 'What would your closest friend do?'

- interpret directive: Interpret how this seemingly unrelated directive could apply to your problem

- apply insight: Apply the resulting insight to develop an unexpected solution

Forced Connections

Process:

- select domains: Identify your primary domain and select a distant, unrelated domain

- map elements: Identify key elements, principles, or structures in both domains

- transfer elements: Transfer elements from the random domain to the primary domain

- synthesize: Create a coherent synthesis incorporating elements from both domains

Replacement Template

Process:

- identify trait: Identify the key trait T of subject P that should be highlighted

- find symbol: Find a symbol S that is universally associated with trait T

- create context: Create a context where trait T is essential for S to function

- replace: Replace S with P in this context

SCAMPER Transformation

Process:

- select base: Select a base concept, product, or service to transform

- choose operations: Select 2-3 SCAMPER operations that seem most promising (Substitute, Combine, Adapt, Modify, Purpose, Eliminate, or Reverse)

- apply operations: Apply your chosen operations to transform the base concept

- synthesize: Integrate the transformations into a coherent new concept

Assumption Reversal

Process:

- list assumptions: Identify fundamental assumptions or 'rules' in the domain you're exploring

- select assumption: Choose the most fundamental or taken-for-granted assumption

- reverse assumption: Reverse this assumption or imagine it doesn't exist

- explore consequences: Develop a practical idea based on this reversal

Conceptual Blending

Process:

- select inputs: Select two distinct but potentially complementary conceptual spaces

- map crossspace: Identify correspondences and shared structures between the input spaces

- blend selectively: Selectively project elements from both inputs into a blended space

- develop emergent: Develop the unique emergent properties that arise in the blended space

Distance Association

Process:

- anchor concept: Establish the central concept you're working with

- generate associations: Generate concepts at varying distances from the anchor (near, medium, far)

- select medium: Select medium-distance concepts that are not obvious but still connectable

- develop association: Create a meaningful connection between the anchor and the medium-distance concept
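One way to represent these strategies in code is as named lists of steps that are rendered into the prompt. The sketch below is an assumption about structure, not the project's actual prompt: the `Strategy` class, `build_prompt`, and the surrounding prompt wording are hypothetical, while the step labels and instructions are copied from the Assumption Reversal and Forced Connections lists above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Strategy:
    """A creative strategy as an ordered list of (label, instruction) steps."""
    name: str
    steps: Tuple[Tuple[str, str], ...]


STRATEGIES = (
    Strategy("Assumption Reversal", (
        ("list assumptions", "Identify fundamental assumptions or 'rules' in the domain you're exploring"),
        ("select assumption", "Choose the most fundamental or taken-for-granted assumption"),
        ("reverse assumption", "Reverse this assumption or imagine it doesn't exist"),
        ("explore consequences", "Develop a practical idea based on this reversal"),
    )),
    Strategy("Forced Connections", (
        ("select domains", "Identify your primary domain and select a distant, unrelated domain"),
        ("map elements", "Identify key elements, principles, or structures in both domains"),
        ("transfer elements", "Transfer elements from the random domain to the primary domain"),
        ("synthesize", "Create a coherent synthesis incorporating elements from both domains"),
    )),
)


def build_prompt(task: str, summary: str, strategy: Strategy) -> str:
    """Render a prompt that asks the model to work through the strategy steps.

    The framing sentences are illustrative wording, not the original prompt.
    """
    lines = [
        f"Task: {task}",
        f"Current population summary: {summary}",
        f"Before answering, follow the {strategy.name} process:",
    ]
    for number, (label, instruction) in enumerate(strategy.steps, start=1):
        lines.append(f"{number}. {label}: {instruction}")
    lines.append("Then output the new artifact.")
    return "\n".join(lines)
```

Because each artifact can be stored alongside the `name` of the strategy that produced it, the same structure also supports the per-strategy comparison reported in the results.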

Results

Tests were conducted on the shader and clock (website with the p5.js library) domains. Each configuration was run with the o3-mini model from OpenAI. It is worth noting that small changes in cosine distance can correspond to large perceptual changes. Two prompt modes for evolution were tested: "variation" means a random artifact is mutated, while "creation" means a wholly new artifact is created from scratch given the current population summary.

Comparison of final population novelty and genome length (source code string length) for each configuration. Each was run with the o3-mini model from OpenAI for 30 generations with a population size of 20 and 10 new children per generation. All tests were repeated three times. Novelty is the average distance to the 3 nearest neighbors for each member of the final population. Above is the fold increase over the baseline of a random population.
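As a hedged illustration of the arithmetic behind the fold increase: the sketch below assumes that each repeat yields one novelty score per member of the final population, that scores are averaged within a repeat and then across repeats, and that the result is divided by the same quantity for the baseline. The exact aggregation used in the experiments is not stated, and `fold_increase` is a hypothetical name.

```python
from statistics import mean
from typing import Sequence


def fold_increase(config_runs: Sequence[Sequence[float]],
                  baseline_runs: Sequence[Sequence[float]]) -> float:
    """Ratio of mean final-population novelty to the baseline's mean novelty.

    Each inner sequence holds the per-artifact novelty scores of one repeat.
    Averaging per repeat first, then across repeats, is an assumption.
    """
    config_mean = mean(mean(run) for run in config_runs)
    baseline_mean = mean(mean(run) for run in baseline_runs)
    if baseline_mean == 0:
        raise ValueError("baseline novelty is zero; fold increase is undefined")
    return config_mean / baseline_mean
```

A value of 1.0 means the configuration is no more diverse than the baseline population; values above 1.0 indicate a gain.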

Each artifact is tagged with the creative strategy used to create it, which allows us to compare the improvement from each strategy. Below is the percentage of the population produced by each strategy.

Strategy Usage Comparison Between Website and ShaderArtifact Domains. The scatter plot shows the percentage usage of different creative strategies across two domains, with website usage on the x-axis and ShaderArtifact usage on the y-axis. Error bars indicate standard deviation across multiple experimental runs. The diagonal dashed line represents equal usage in both domains. The plot reveals no significant correlation between strategy usage patterns across domains (r=-0.02, p=0.963), with some strategies showing domain-specific preferences. Notably, Replacement Template is used substantially more in ShaderArtifact generation, while Conceptual Blending shows higher usage in website generation. Other strategies like SCAMPER Transformation, Distance Association, Oblique Strategies, and Assumption Reversal demonstrate more balanced usage across both domains.

Insights

Creative strategies boost diversity: Formalized creative thinking strategies significantly increased novelty metrics compared to baseline outputs.

Variation beats creation: Modifying existing artifacts produced more diverse results than generating new ones from scratch.

Domain-specific effectiveness: Replacement Template excelled for shaders while Conceptual Blending performed better for websites.

Crossover amplifies novelty: Combining elements from multiple solutions yielded the highest novelty scores in the experiments, indicating that constraints can help counter the model's innate laziness.

Novelty-complexity link: More complex artifacts (longer code) explored more novel territory in the latent space. The most diverse run, using crossover and creative strategies, produced outputs nearly twice as long on average as the runs without.

Reasoning has diminishing returns: Higher reasoning levels didn't significantly increase diversity, although they may improve quality.

Context awareness matters: Population summaries consistently improved performance, highlighting the importance of evolutionary context.

LLMs default to average: Without evolutionary pressure, language models produce homogeneous solutions rather than exploring creative boundaries.

General applicability: Similar novelty dynamics persisted across all tested domains (shaders, clocks, architecture), remaining unsaturated after 60 generations.

Background

Genetic algorithms (GAs) mimic natural selection to efficiently explore complex solution spaces [1]. While extensively applied in neural architecture search and procedural content generation, their integration with LLMs for creative exploration is still emerging.

Recent evolutionary computation has explored quality-diversity algorithms, seeking diverse high-performing solutions rather than a single optimum. Novelty Search [2] rewards behavioral diversity, while MAP-Elites [3] maintains an archive of diverse solutions along dimensions of interest. These approaches have demonstrated how rewarding behavioral novelty rather than fixed goals can drive ongoing innovation, mirroring the diversity of biological evolution.

Open-endedness expands on the insights of novelty search and aims to create systems that continually produce novel, increasingly complex forms [4]. Soros et al. [5] bridged computational creativity and open-endedness research, although this has not been applied to language models.

For large language models, prompting has emerged as a crucial skill for using the models effectively. It involves crafting prompts, often by providing context, examples, or specific instructions. Techniques like few-shot learning [6] and chain-of-thought prompting [7] have shown significant improvements in task performance. Recently, OpenAI released reasoning models that use reinforcement learning to generate their own chain of thought. This is very effective for convergent logic problems such as programming and math, but these models did worse on creative tasks. In contrast to the expansive creativity of open-endedness, language models have been shown to increase the homogenization of ideas [8].

A key and novel component in this work is the systematic application of formal creativity theories to facilitate creative thinking. Drawing from Margaret Boden's conceptual spaces theory [9] and her foundational work on creativity and artificial intelligence [10], I implement strategies pushing language models beyond conventional outputs. I also incorporate Brian Eno's Oblique Strategies [11], Edward de Bono's lateral thinking [12], Arthur Koestler's bisociation theory [13], and Fauconnier and Turner's conceptual blending [14], approaches proven effective in human creativity but not previously applied systematically to language model generation.

Limitations and Future work

Optimized strategies: The creative strategies were effective but arbitrarily created and there was no thorough evaluation of their ideal structure or content. This sets the stage for potentially co-evolving strategies alongside artifacts that adapt to specific domains. These evolved "chain of creative thought templates" could even be reusable in direct prompting.

Quality and Constraints: This algorithm can fail to produce valid outputs when the constraints are not innately satisfied by the LLM. Currently, it lacks mechanisms to repair infeasible solutions or enforce minimal quality criteria. Potential improvements include applying selection criteria to the reproducing population or leveraging the multimodal capabilities of models to "see" outputs via image inputs. At the time of writing, image capabilities were not yet available in the o3-api, though this will likely change soon. In the future, if costs decrease, the entire population could be presented as images, allowing selection pressure to be applied based on phenotypes (visual outputs) rather than textual summaries of genomes.

Concept / Implementation barrier: Large outputs are costly to generate in every generation. An alternative approach is to evolve only a shorter description of each artifact's central idea and then use a more powerful model to generate full artifacts for the final generations. This is analogous to how most projects are evaluated at the concept phase. The main challenge is that many concepts generated by the LLM are impractical, diverge greatly when implemented, or are hard to articulate (such as shaders, which are hard to describe in text). Future work could look to bridge this gap.

Creative Reasoning Analysis: It would be illuminating to view and analyze the model's reasoning on the different strategies to better understand how it is interpreting the creative strategies. The API from OpenAI does not enable viewing the reasoning output, but open source reasoning models are rapidly improving.

References

1. Holland, J. H. (1975). Adaptation in natural and artificial systems: An introductory analysis with applications to biology, control, and artificial intelligence. University of Michigan Press.

2. Lehman, J., & Stanley, K. O. (2011). Abandoning objectives: Evolution through the search for novelty alone. Evolutionary Computation, 19(2), 189-223.

3. Mouret, J. B., & Clune, J. (2015). Illuminating search spaces by mapping elites. arXiv preprint arXiv:1504.07467.

4. Stanley, K. O. (2019). Why open-endedness matters. Artificial Life, 25(3), 232-235.

5. Soros, L., et al. (2024). On creativity and open-endedness. arXiv preprint arXiv:2405.18016.

6. Brown, T. B., et al. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877-1901.

7. Wei, J., et al. (2022). Chain-of-thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903.

8. Anderson, B. R., Shah, J. H., & Kreminski, M. (2024). Homogenization effects of large language models on human creative ideation. Proceedings of the 16th Conference on Creativity & Cognition.

9. Boden, M. A. (2004). The creative mind: Myths and mechanisms. Routledge.

10. Boden, M. A. (1998). Creativity and artificial intelligence. Artificial Intelligence, 103(1-2).

11. Eno, B., & Schmidt, P. (1975). Oblique Strategies: Over One Hundred Worthwhile Dilemmas.

12. De Bono, E. (2015). Lateral thinking: Creativity step by step. Harper Colophon.

13. Koestler, A. (1964). The act of creation. Hutchinson.

14. Fauconnier, G., & Turner, M. (2008). The way we think: Conceptual blending and the mind's hidden complexities. Basic Books.