Dimensions of Dialogue

2018

A series of small experiments inspired by early writing systems such as cuneiform and hieroglyphs. Here, new writing systems are created by challenging two neural networks to communicate information via images. Using the magic of machine learning, the networks attempt to create their own emergent language isolate that is robust to noise. This is similar to a machine learning architecture called generative adversarial networks (GANs), except that the networks are collaborating rather than competing.
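To make the setup concrete, here is a minimal sketch of two collaborating networks in plain Python. It is not the code behind this project: the sizes, the noise level, the learning rate and the single-layer "writer" and "reader" are all assumptions chosen to keep the example tiny. The writer turns a character index into pixels, Gaussian noise is added, and the reader guesses which character was sent. Both sides are updated to reduce the same loss.

```python
import math
import random

K = 4        # number of characters (the first experiment uses 100)
P = 16       # pixels per image (hypothetical; the real image size is not stated)
NOISE = 0.3  # std of Gaussian noise added to each pixel (hypothetical)
LR = 0.05    # learning rate (hypothetical)


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def draw(enc, k):
    """Writer: a one-hot input selects row k; tanh keeps pixels within (-1, 1)."""
    return [math.tanh(v) for v in enc[k]]


def read(dec, pixels):
    """Reader: one linear layer plus softmax over the K characters."""
    logits = [sum(pixels[i] * dec[i][j] for i in range(P)) for j in range(K)]
    return softmax(logits)


def train(steps=3000, seed=0):
    rng = random.Random(seed)
    enc = [[rng.gauss(0, 1) for _ in range(P)] for _ in range(K)]
    dec = [[rng.gauss(0, 0.1) for _ in range(K)] for _ in range(P)]
    for _ in range(steps):
        k = rng.randrange(K)
        img = draw(enc, k)
        noisy = [p + rng.gauss(0, NOISE) for p in img]
        probs = read(dec, noisy)
        # Gradient of the cross-entropy loss w.r.t. the logits: probs - one_hot(k).
        dlogits = [probs[j] - (1.0 if j == k else 0.0) for j in range(K)]
        # Backpropagate into the image before the reader's weights change.
        dimg = [sum(dec[i][j] * dlogits[j] for j in range(K)) for i in range(P)]
        for i in range(P):
            for j in range(K):
                dec[i][j] -= LR * noisy[i] * dlogits[j]
            # The writer descends the same loss: collaboration, not competition.
            enc[k][i] -= LR * dimg[i] * (1.0 - img[i] ** 2)
    return enc, dec


def accuracy(enc, dec, trials=400, seed=1):
    """Fraction of noisy images the reader decodes back to the right character."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        k = rng.randrange(K)
        noisy = [p + rng.gauss(0, NOISE) for p in draw(enc, k)]
        probs = read(dec, noisy)
        hits += probs.index(max(probs)) == k
    return hits / trials


if __name__ == "__main__":
    enc, dec = train()
    print("accuracy under noise:", accuracy(enc, dec))
```

In a GAN the second network's loss would be the negative of the first's. Here both gradients come from one shared cross-entropy term, which is the only structural difference this sketch is meant to show.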

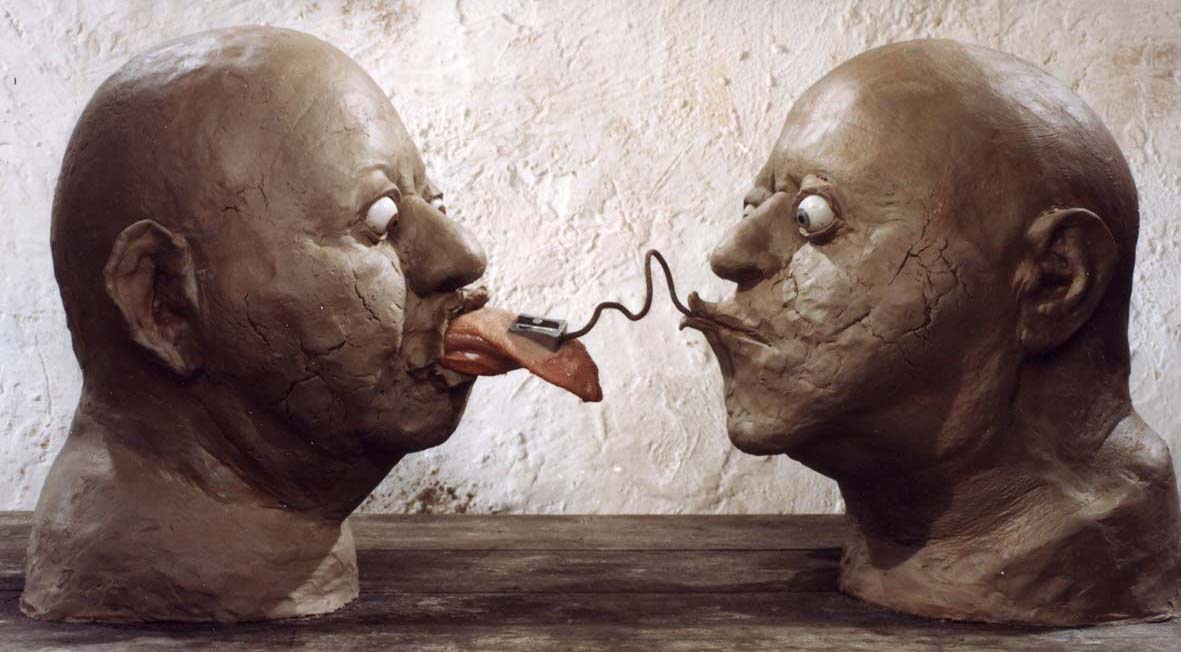

Above: two entities from Jan Švankmajer's Dimensions of Dialogue that have learned their own language.

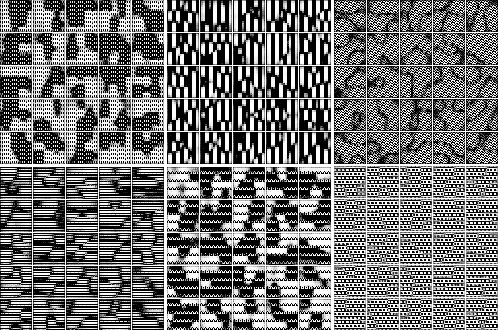

1) Learning a language of 100 characters

The 'information' the first neural network communicates is a 100-length one-hot vector: every value is zero except for a single one. A four-element example would be [0, 0, 1, 0].
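As a small illustration, a one-hot vector like this can be built in a couple of lines. The helper name `one_hot` is made up for this example and is not taken from the project's code.

```python
def one_hot(index, size=100):
    """Return a list of `size` zeros with a single 1 at position `index`."""
    if not 0 <= index < size:
        raise ValueError("index out of range")
    vec = [0] * size
    vec[index] = 1
    return vec


print(one_hot(2, size=4))  # [0, 0, 1, 0]
```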

Above are samples of twenty-five characters from each of six different languages. Each language was produced by re-running the neural networks with the same parameters. Each language is a distinct, structured visual system, often with micro and macro structures. The larger forms are a way of staying resilient to the noise, and the size of the forms varies with the amount of noise.
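One plausible reason larger forms help: if the noise is independent from pixel to pixel, averaging over a blob of n pixels shrinks the noise by roughly a factor of the square root of n. The sketch below checks that numerically. It assumes Gaussian per-pixel noise, which is a guess, since the kind of noise used in these experiments is not specified here.

```python
import random
import statistics


def mean_noise_std(num_pixels, sigma=1.0, trials=2000, seed=0):
    """Std of the average noise over a blob of `num_pixels` noisy pixels."""
    rng = random.Random(seed)
    means = [
        sum(rng.gauss(0.0, sigma) for _ in range(num_pixels)) / num_pixels
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        # Each value should land close to sigma / sqrt(n).
        print(n, round(mean_noise_std(n), 3))
```

Under this assumption, a stroke sixteen pixels in area sees about a quarter of the noise that a single-pixel detail does, so heavier noise would push the networks toward bigger shapes.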

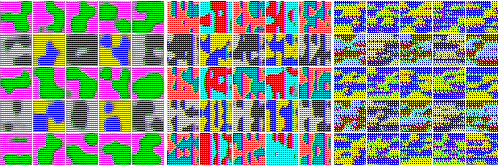

2) Learning languages with 10,000 characters in color

From left to right: high, medium and low amounts of noise. Adding color did not generally change the output, and most languages became two-colored.

3) Learning data vectors

Here, rather than each image being one character (one-hot encoding), each image encodes a vector of information. With 20 binary dimensions there are over a million possibilities (2^20 = 1,048,576) - ex: [1, 0, 1, 0]. The animations show how the characters evolve during training.
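The "over a million" figure follows if each of the 20 dimensions is binary, which the example vector suggests but which is an assumption here. A quick check, with a hypothetical helper `to_bits` that writes a message number as such a vector:

```python
DIMENSIONS = 20

# Two choices per dimension, twenty dimensions.
num_messages = 2 ** DIMENSIONS
print(num_messages)  # 1048576


def to_bits(n, width=DIMENSIONS):
    """Binary vector for message number n, most significant bit first."""
    return [(n >> i) & 1 for i in reversed(range(width))]


print(to_bits(10, width=4))  # [1, 0, 1, 0]
```

Compare this with the one-hot case, where a 20-length vector could only name 20 distinct characters.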

By examining the output for specific inputs, we can try to understand how the languages work. Below, the first three of the twenty dimensions are varied.

We can observe some patterns. For example, the first dimension of the second language is represented with a type of macron (a bar above the letter).
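The probing described above amounts to feeding the first network a small grid of inputs that differ only in their first three dimensions. A sketch of how such a grid could be generated follows. Holding the other seventeen dimensions at zero is an assumption, and `probes` is a made-up name.

```python
from itertools import product

DIMENSIONS = 20


def probes(varied=3, width=DIMENSIONS):
    """All 2**varied inputs that differ only in the first `varied` dimensions."""
    rest = [0] * (width - varied)  # assumption: remaining dimensions held at 0
    return [list(bits) + rest for bits in product((0, 1), repeat=varied)]


grid = probes()
print(len(grid))    # 8
print(grid[5][:4])  # [1, 0, 1, 0]
```

Comparing the images for two inputs that differ in a single dimension is what lets a feature like the macron be tied to that dimension.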

4) Learning words

Next, the networks were challenged to learn not just distinct characters but English words. The words are fed through a word-to-vector library (trained on Wikipedia) before being given to the networks. These vectors are the 'ideas' that must be communicated.

The networks did extremely well: of the top 10,000 words, only about 200 were lost in translation. Some examples: all-they, three-four, now-they, tuesday-monday, five-four, wednesday-monday, thursday-monday, however-not, six-four, took-after, see-what, does-you, came-when, march-june, come-what, july-june, although-though, april-june, already-have, though-even, others-they, ...
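One way such a "lost in translation" list could be computed is to send each word's vector through the networks, look up the nearest word to whatever comes out, and record the pairs that disagree. The sketch below assumes each pair reads as input word, then decoded word, and uses cosine similarity for the lookup. The vocabulary is three made-up vectors, and `lossy_channel` is a hypothetical stand-in for the writer, image and reader round trip.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_word(vec, vocab):
    """Word whose vector has the highest cosine similarity to `vec`."""
    return max(vocab, key=lambda w: cosine(vec, vocab[w]))


def lost_in_translation(vocab, round_trip):
    """Return 'input-decoded' pairs for words that do not survive the trip."""
    errors = []
    for word, vec in vocab.items():
        decoded = nearest_word(round_trip(vec), vocab)
        if decoded != word:
            errors.append(f"{word}-{decoded}")
    return errors


# Toy, made-up 3-d vectors; real word vectors have far more dimensions.
toy_vocab = {
    "monday": [0.9, 0.1, 0.0],
    "tuesday": [0.8, 0.2, 0.0],
    "june": [0.0, 0.1, 0.9],
}


def lossy_channel(vec):
    """Stand-in for the two networks: returns a slightly distorted vector."""
    return [vec[0] + 0.2, vec[1] - 0.1, vec[2]]


print(lost_in_translation(toy_vocab, lossy_channel))  # ['tuesday-monday']
```

The toy result mirrors the pattern in the list above: words that sit close together in vector space, like weekdays or months, are the ones most easily confused.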

Similar concepts produce somewhat similar images.

5) Lastly, learning language

A document-to-vector model was trained on 300,000 lines of movie dialogue, and those vectors were given to the networks. Below are examples with random sentences from the database.

Any sentence can be given to the first network. By also giving it components of the sentence, we can try to see what's going on.

Reflections and future work

The intent of this work was for me to get my feet wet with custom neural networks and think more about their poetics. While the original intention was more about language, I think it turned out to be more about typography and calligraphy. Writing systems, while emergent pieces of culture, are also the results of optimization processes that produce images that are understandable (mutually distinctive) and robust to noise.

Further work with language could explore its adversarial and collaborative nature. We communicate information while also playing games with our language. Additionally, language is constantly evolving. Networks of neural networks could have each network acting in both collaborative and adversarial ways. One network may try to communicate information to one and disinformation to another using the same characters. The result could be an unstable, constantly shifting language with in-groups attempting to evolve their own language faster than it could be disseminated.

While these images are very low-res and crude, I could see custom-designed QR codes being an interesting application. It might also be interesting to create a custom typeface optimized for both JPEG compression and readability.

Update - the earlier idea became Neural Tablets (Dimensions of Dialogue Pt 2)

Above: an example of a pair of neural networks that are adversarial or have simply not learned how to communicate well.